
I am a PhD candidate at the Computer Vision Lab at ETH Zurich under the supervision of Luc Van Gool (ETH Zurich) and Federico Tombari (Google). My research focuses primarily on uncertainty quantification in deep neural networks, and in particular on efficiently estimating the epistemic uncertainty of neural networks. I have also worked on neural compression algorithms and generative models for point cloud data.


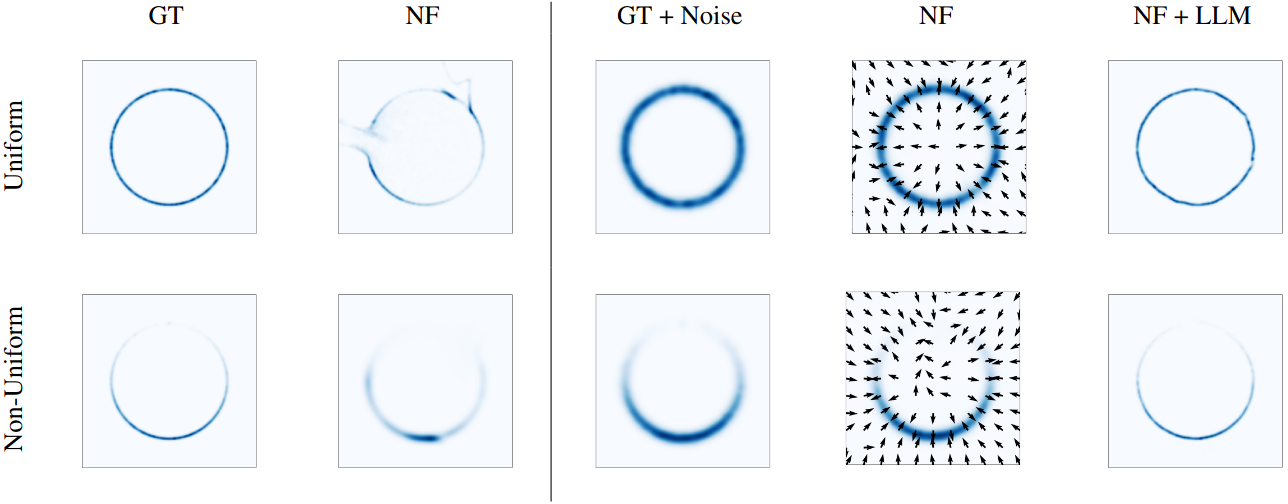

Janis Postels, Martin Danelljan, Luc Van Gool, Federico Tombari

International Conference on 3D Vision 2021, 2022

paper

We propose an approach for sampling from lower-dimensional manifolds using normalizing flows. Moreover, we introduce an improved version of our algorithm tailored to 3D point clouds.

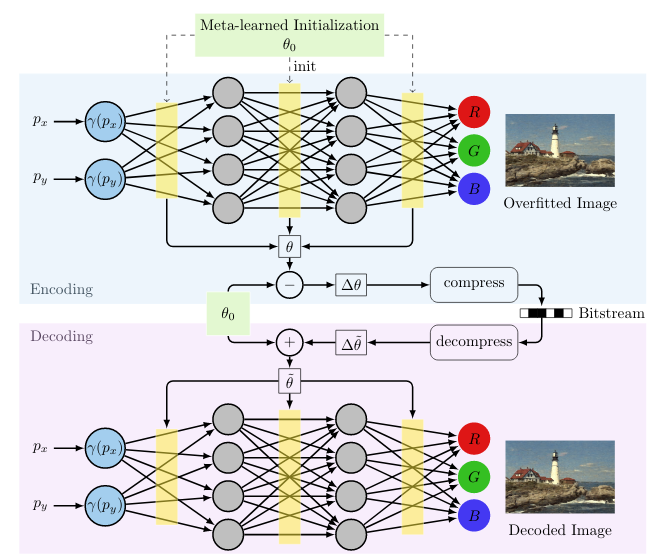

Yannick Struempler*, Janis Postels*, Ren Yang, Luc Van Gool, Federico Tombari

European Conference on Computer Vision, 2022

paper / code

We propose a neural image compression algorithm that leverages neural implicit representations and meta-learning.

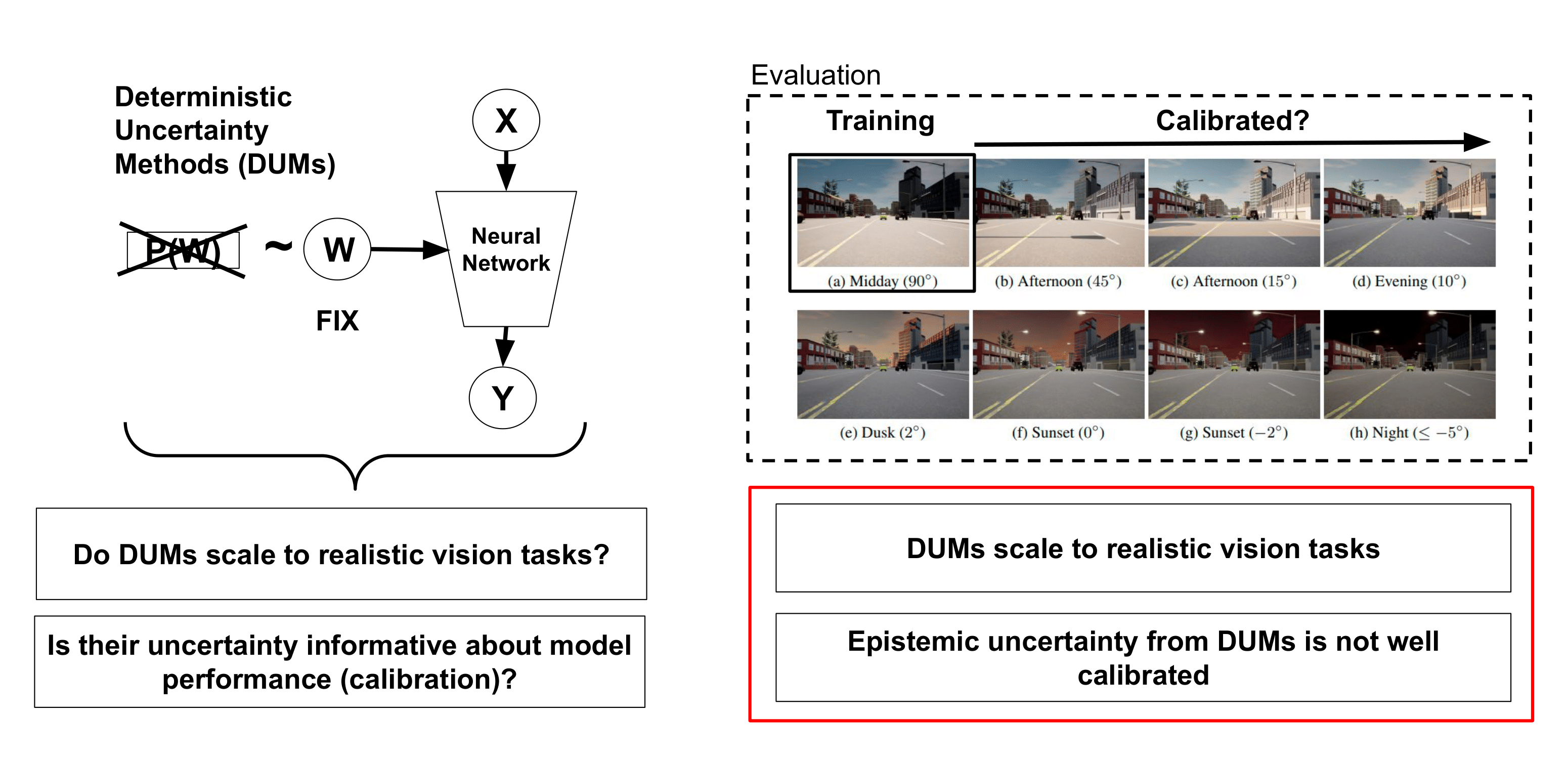

Janis Postels*, Mattia Segu*, Tao Sun, Luc Van Gool, Fisher Yu, Federico Tombari

International Conference on Machine Learning, 2022

paper / code

We show that a recent family of uncertainty estimation methods, which treat the weights of a neural network deterministically, predicts poorly calibrated uncertainty.

Tao Sun*, Mattia Segu*, Janis Postels, Yuxuan Wang, Luc Van Gool, Bernt Schiele, Federico Tombari, Fisher Yu

Conference on Computer Vision and Pattern Recognition, 2022

paper / code / website

We introduce a synthetic dataset for multi-task learning that allows fine-grained control over continuous distributional shifts.

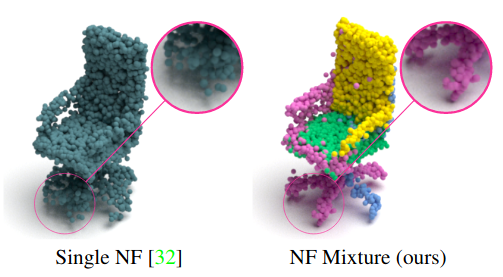

Janis Postels, Mengya Liu, Riccardo Spezialetti, Luc Van Gool, Federico Tombari

International Conference on 3D Vision, 2021

paper / code

We mitigate drawbacks of prior normalizing-flow-based generative models for point clouds by introducing a mixture of normalizing flows.

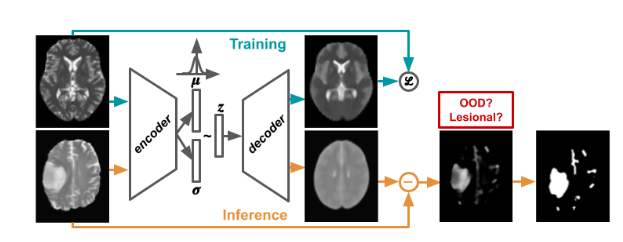

Matthäus Heer, Janis Postels, Xiaoran Chen, Ender Konukoglu, Shadi Albarqouni

Medical Imaging with Deep Learning, 2021

paper

We demonstrate the vulnerability of recent VAE-based approaches to unsupervised anomaly detection in medical images.

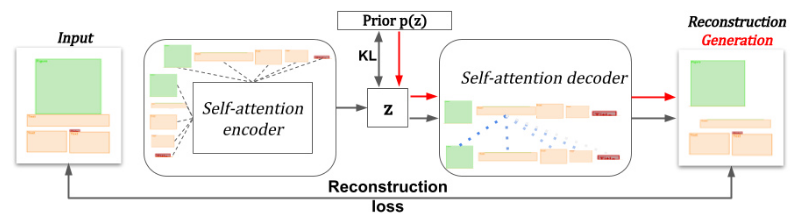

Diego Martin Arroyo, Janis Postels, Federico Tombari

Conference on Computer Vision and Pattern Recognition, 2021

paper

We introduce a generative model for layouts based on VAEs and attention layers and demonstrate its strong inductive bias.

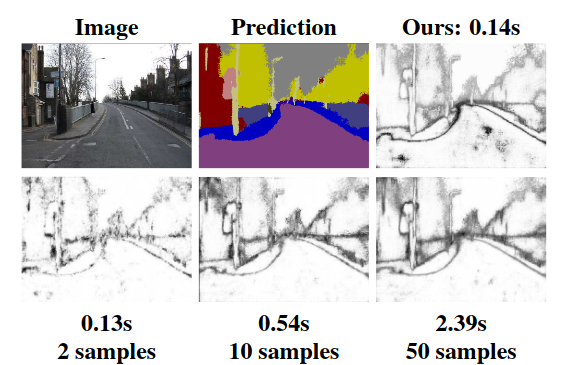

Janis Postels*, Hermann Blum*, Yannick Strümpler, Cesar Cadena, Roland Siegwart, Luc Van Gool, Federico Tombari

arXiv, 2020

paper

We demonstrate that the uncertainty of a neural network can be quantified using the distribution of its hidden representations.

Janis Postels, Francesco Ferroni, Huseyin Coskun, Nassir Navab, Federico Tombari

International Conference on Computer Vision (ORAL), 2019

paper / code

We propose a method to estimate the uncertainty of Bayesian neural networks in a single forward pass by applying error propagation.

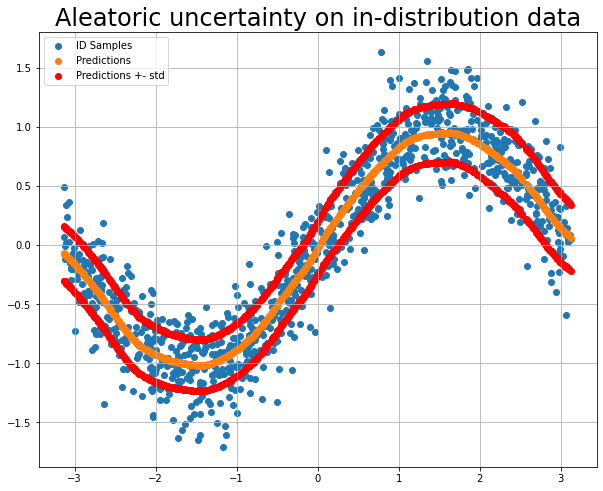

Blogpost / colab

In this blogpost, I first introduce the commonly considered types of uncertainty. Then I demonstrate on toy regression and classification examples how to separate these types of uncertainty using Bayesian neural networks.
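For readers who want a concrete picture of what separating uncertainty types can look like in classification, here is a minimal sketch of one common decomposition. It is not necessarily the method used in the blogpost. It assumes the Bayesian neural network has already produced several class-probability vectors for the same input, one per weight sample. The function names `entropy` and `decompose_uncertainty` are hypothetical. Total uncertainty is the entropy of the averaged prediction, aleatoric uncertainty is the average entropy of the individual predictions, and epistemic uncertainty is their difference.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def decompose_uncertainty(sampled_probs):
    """Split the predictive uncertainty for a single input.

    sampled_probs: list of class-probability vectors, one per weight
    sample (assumed to come from a Bayesian neural network).
    Returns (total, aleatoric, epistemic), all in nats.
    """
    n = len(sampled_probs)
    num_classes = len(sampled_probs[0])
    # Average the sampled predictions class by class.
    mean_probs = [sum(p[c] for p in sampled_probs) / n for c in range(num_classes)]
    total = entropy(mean_probs)                           # entropy of the mean
    aleatoric = sum(entropy(p) for p in sampled_probs) / n  # mean of the entropies
    epistemic = total - aleatoric                         # disagreement between samples
    return total, aleatoric, epistemic


# All weight samples agree that the input is ambiguous: purely aleatoric.
print(decompose_uncertainty([[0.5, 0.5], [0.5, 0.5]]))
# Weight samples are individually confident but disagree: purely epistemic.
print(decompose_uncertainty([[1.0, 0.0], [0.0, 1.0]]))
```

Both toy inputs have the same total uncertainty (log 2 nats), but the decomposition attributes it to different sources, which is the distinction the blogpost is about.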